TL;DR - There are several possible metrics for evaluating threat detection artifacts, with adversary pain being the only metric that gets a pyramid. I have therefore set out to correct this historical injustice. You can skip to the end of this blogpost to look on my works, ye mighty and despair.

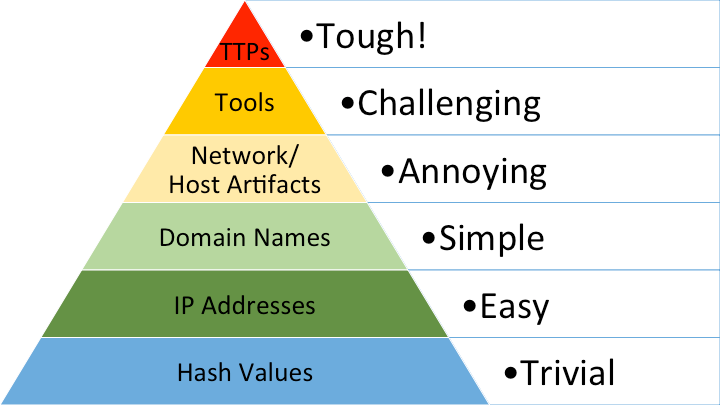

The Pyramid of Pain is a well-known cyber threat intelligence concept, originally conceived by David J. Bianco in 2013. For those unfamiliar, the lower parts of the pyramid list things that are widely available to threat actors, who can therefore easily move on when they’re “burned” by simply shaking it off and switching things up at minimal cost. The higher parts of the pyramid include things that are painful for threat actors to replace, so denying them has greater impact and imposes greater cost.

Note that “denying” doesn't just mean discovering something or making it public. Instead, it means (ideally) preventing the threat actor from using something that they rely on in their cyber operations, or at the very least, making it much harder or prohibitively expensive to continue using it. As in all things related to threat intel, this involves making our discoveries truly actionable for defenders.

As a side-note, every threat actor has different things they might find painful, depending on what’s important to them and how they operate. For example, an actor using bespoke self-developed tooling and relying on exploitation of 0-day vulnerabilities or supply chain vectors would be severely impacted just by having them exposed. Other actors might not be bothered at all if we merely figure out what tools they’re using – this is especially true if they’re not bringing anything with them in the first place (i.e., Living off the Land); if they’re using open-source or commercial offensive security software; or if they gain access to their targets via n-days, misconfigurations, or compromised credentials. Some particularly resourceful threat actors might not even be especially hindered by the loss of a few 0-day vulnerabilities, treating it as the cost of doing business, and will only truly be impacted by robust mitigations that deny whole bug classes or even entire attack surfaces.

Anyway, while the Pyramid of Pain is an incredibly useful concept, impact to adversaries is only one metric to consider when gathering threat intelligence and maintaining our threat detection strategy. There are other scales by which one should measure the various qualities of threat detection artifacts. Some good prior examples are Steve Miller’s “Detectrum” approach, which shows how different types of artifacts lend themselves to different types of threat intelligence and detection work, and Jared Atkinson’s slightly similarly named Detection Spectrum, which places detections themselves on a scale that moves between precise (with low false positives but high false negatives) and broad (with high false positives but low false negatives).
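To make the precise-versus-broad trade-off a bit more concrete, here is a minimal Python sketch that scores two detections by precision and recall. The `DetectionOutcome` class and all of the counts are hypothetical and made up purely for illustration; they are not taken from Atkinson's work or from any real detection.

```python
from dataclasses import dataclass


@dataclass
class DetectionOutcome:
    """Hypothetical tally of how one detection performed on labelled events."""
    true_positives: int
    false_positives: int
    false_negatives: int

    @property
    def precision(self) -> float:
        # Share of alerts that were actually malicious.
        flagged = self.true_positives + self.false_positives
        return self.true_positives / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        # Share of malicious events that raised an alert.
        actual = self.true_positives + self.false_negatives
        return self.true_positives / actual if actual else 0.0


# Illustrative numbers only: a precise rule misses a lot but rarely cries wolf,
# while a broad rule catches most activity at the cost of noisy alerts.
precise_rule = DetectionOutcome(true_positives=4, false_positives=0, false_negatives=6)
broad_rule = DetectionOutcome(true_positives=9, false_positives=21, false_negatives=1)

for name, rule in (("precise", precise_rule), ("broad", broad_rule)):
    print(f"{name}: precision={rule.precision:.2f} recall={rule.recall:.2f}")
```

With these made-up counts the precise rule has perfect precision but low recall, and the broad rule is the other way around, which is the scale the Detection Spectrum describes.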

An interesting aspect of the Pyramid of Pain is that the impact to threat actors when a certain type of artifact is burned often positively correlates with other completely different qualities of that artifact. For instance, the more painful the artifact is to lose – say, a bespoke tool – the more reliably we can use (a sample of) it as a source for robust indicators of compromise, while making it harder for the adversary to avoid detection through mere polymorphism. To this end, we can write YARA rules to detect common denominators between variants of the same tool and even employ code similarity techniques to deny an adversary opportunities to use related tools against their potential targets.
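As a rough illustration of the code similarity idea, the sketch below compares samples by the overlap of their byte n-grams (Jaccard similarity). This is a deliberately simple stand-in for real code similarity tooling, not a description of any specific product or technique; the function names and the sample byte strings are assumptions invented for the example.

```python
from __future__ import annotations


def byte_ngrams(data: bytes, n: int = 4) -> set[bytes]:
    """Return the set of all n-byte substrings in a sample."""
    return {data[i:i + n] for i in range(len(data) - n + 1)}


def jaccard_similarity(a: bytes, b: bytes, n: int = 4) -> float:
    """Overlap of two samples' n-gram sets, from 0.0 (disjoint) to 1.0 (identical sets)."""
    grams_a, grams_b = byte_ngrams(a, n), byte_ngrams(b, n)
    union = grams_a | grams_b
    if not union:
        # Both samples are shorter than n bytes, so there is nothing to compare.
        return 0.0
    return len(grams_a & grams_b) / len(union)


# Hypothetical samples: two "variants" share a body but differ in a prefix,
# which is enough to change a file hash but not the shared n-grams.
shared_body = b"decode_config|beacon_loop|exfil_chunk"
variant_a = b"HDR1" + shared_body
variant_b = b"HDR2xx" + shared_body
unrelated = b"a completely different benign program body"

print(jaccard_similarity(variant_a, variant_b))
print(jaccard_similarity(variant_a, unrelated))
```

The two variants would have entirely different hash values, yet they score far closer to each other than either does to the unrelated sample. That is the property that makes a burned tool a more robust source of indicators than a burned hash.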

However, there are some points at which this correlation between qualities isn’t necessarily true – for example, while the level of pain for losing access to tools corresponds to our ability to attribute activity involving that tool to a specific actor, the same isn’t true of TTPs, which are more likely to be shared by multiple actors and are therefore less attributable. This discrepancy leads me to the conclusion that we probably need at least a few more pyramids than the one we already have, to rank detection artifacts according to different metrics, and shed light on how they differ from one another.
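One way to spot this kind of discrepancy is to score artifacts on several axes and look for pairs whose order flips between them. The sketch below does that for tools and TTPs. The pain values follow their positions in the original pyramid (tools fifth, TTPs sixth), while the attribution values are placeholder assumptions chosen only to reflect the point made above, not a measured ranking.

```python
from itertools import combinations

# Ordinal scores per axis; higher means "more" of that quality.
# "pain" mirrors the original pyramid's levels; "attribution" is a
# hypothetical placeholder, not an authoritative ranking.
SCORES = {
    "tools": {"pain": 5, "attribution": 5},
    "TTPs": {"pain": 6, "attribution": 3},
}


def discordant_pairs(scores, axis_a, axis_b):
    """Return artifact pairs that are ordered one way on axis_a and the other way on axis_b."""
    pairs = []
    for left, right in combinations(sorted(scores), 2):
        diff_a = scores[left][axis_a] - scores[right][axis_a]
        diff_b = scores[left][axis_b] - scores[right][axis_b]
        # Opposite signs mean the two axes disagree about which artifact ranks higher.
        if diff_a * diff_b < 0:
            pairs.append((left, right))
    return pairs


print(discordant_pairs(SCORES, "pain", "attribution"))
```

Any pair this returns is a place where a single pyramid would mislead us, which is the argument for keeping more than one.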

At this point you might suspect that I am in fact an Egyptian pharaoh, or perhaps you have surmised that this blogpost is all a ruse to convince you to invest in my pyramid-themed pyramid scheme, but I hope you’ll disregard these suspicions and continue reading regardless. In fact, let’s drop the pyramid thing altogether and focus on other stackable structures instead.

So, without further ado, at the bottom of this post you will find an expanded list of pyramid and pyramid-like concepts you might consider incorporating in your threat detection program, to be used as axes (the mathematical kind, not the wood-chopping kind) by which to evaluate the various qualities of threat detection artifacts.

Some disclaimers (I was lying before about the lack of ado):

There are almost certainly other metrics I have failed to include here.

The rankings in each metric are arguable, as they are a bit handwavy and based entirely on my own judgement, so I invite you to focus mostly on the metrics themselves; you are more than welcome to rank things differently.

While not part of the original Pyramid of Pain, each of the following artifacts can be very painful to lose as well: people (hackers, tool developers, vulnerability researchers, etc.) or their personal information; 0-day vulnerabilities or exploits for them; and targets. I’ve included these as part of an expanded version of the OG pyramid.

The significance of losing access to a target network differs rather dramatically between threat actors – for example, an opportunistic cybercrime actor might be unfazed by our denying them access to a single target (as there are many more cyber-fish in the cyber-sea), whereas for a state-sponsored espionage actor, long-term loss of access to a specific target might be devastating, depending on the identity of the target.

Individual targets do not have much long-term value as detection artifacts, in the sense that once an operation is blown and forensic analysis is complete, there isn’t much else to glean from the target environment unless the threat actor returns to the scene of the crime in the future. However, sets of targets do remain highly valuable as a detection artifact, since they teach us about an actor’s victimology and their preferred sectors of operation, allowing better risk management and supporting future attribution (h/t to Merav for this late addition).